On the previous wiki page we have discussed the various types of redundant vDevs - Mirrors or RAIDZ1/2/3.

This page discusses some other, special, types of vDev that ZFS provides for improving performance in specific circumstances:

- Level-2 Adaptive Replacement Cache (L2ARC) - as opposed to standard Adaptive Replacement Cache (ARC) that comes as standard in normal RAM

- Synchronous Log (SLOG)

- Special (metadata) vDev

- Deduplication

To save you some time and effort in reading and digesting the remainder of this page, here are some general rules of thumb as to why most people don't need special vDevs at all:

- These special vDevs are designed to enhance performance for high intensity I/O environments. Unless you have one of these special performance needs, these vDevs will not improve your perceived NAS speeds, may actually reduce performance instead and will certainly add complexity to your pool (increasing the number of links in the chain that can break, and make recovery potentially more complex when things do go wrong), and will cost you money for no real benefit.

- For general home / very small business usage (PC files, media streaming) normal HDD performance with a sufficient standard ARC will give great performance using RAIDZx without using any of these special vDev types. If you think that performance is impacted by low ARC hit rates, adding more memory may be both the cheapest and most effective way to improve performance. In many cases, you will be limited more by LAN bandwidth than by your NAS disk performance.

- Each of these special devices is designed to ease a specific I/O bottleneck that appears with a specific type of workload (use case). Don't even think of implementing them just because you can, but rather only if you know you have the specific use case that they are designed to fix, and only if you have the right type of hardware to make the implementation effective.

- There is no point in using any of these special device types to speed up a pool which is using the same technology. SATA SSDs are a few orders of magnitude faster than HDDs, and NVMe devices a few orders of magnitude faster than SSDs - so if you want these special devices to have a significant impact, then aim for NVMe, though SATA SSDs can still provide performance improvements for HDD pools in the right situations.

In the vast majority of cases, none of these special disks are either needed or a good idea, and those use cases that need them are usually pretty specialised. However it is possible that you do have one of these use cases, in which case one or more of these may be of use to you.

If you really feel that you need any of these special disks, you should really be considering the use of NVMe disks (which are substantially faster than SATA SSDs), in which case you may need motherboards with multiple native NVMe slots (i.e. each of which has a direct connection to a dedicated CPU PCIe lane). If you decide to use e.g. PCIe cards with multiple NVMe slots on them, then do make sure that the card supports sufficient PCIe lanes to support the performance needs of those NVMe slots.

¶ L2ARC

As previously stated, ZFS comes with an Adaptive Replacement Cache (ARC) as standard which uses all the otherwise unused memory in your system as a cache for both metadata (data about where your actual files are stored) and the most recently read actual data so that if it is requested again then the request can be satisfied from memory rather than having to go to disk, which is several orders of magnitude faster/slower.

The most important data to be held in ARC is the metadata because this relatively small amount of data is needed to locate where the actual data is stored on disk and it is therefore used relatively frequently. Holding actual data in ARC is less important in most home/small business servers, partly because once the first network request has been sent, ZFS keeps pre-fetching the rest of the file and storing it in cache in anticipation that the remainder of the file will be requested next - and thus all subsequent requests end up being satisfied from ARC.

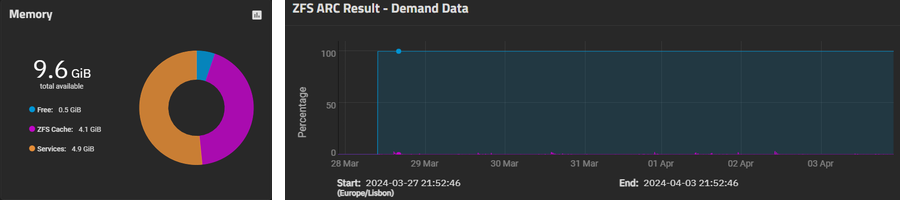

The consequence is that even relatively moderate amounts of ARC can still often provide very high hit ratios in a typical home / small business environment - the authors very modest 5GB ARC delivers c. 99.5% hit rate.

If your ARC hit ratio is not as high as you would like, then adding more memory to your system can easily be the cheapest and most effective way to improve your overall I/O performance.

Because it is held in RAM, standard ARC is not persistent - in other words it does NOT survive a reboot or power off and needs to be repopulated from original disks every time these happen.

A Level 2 Adaptive Replacement Cache (L2ARC) is an SSD (NVMe or SATA) which stores copies of the most frequently accessed metadata and data blocks so that they can be used to populate the ARC faster then reading from slower HDD (or SSD) disks, and L2ARC is persistent across reboots and power downs.

The downside of an L2ARC is that it reduces the amount of memory available for standard ARC because the L2ARC index needs to be held in memory.

Don't consider an L2ARC unless your server has at least 60GB of free memory after O/S, services, apps and VMs are loaded.

So, for some specific workloads which have occasional but repeating access to the same data, then an L2ARC can potentially be quite effective. Potential examples:

- VMs - which used the same blocks from disk every time they boot

- …

¶ Checklist

There is no point in adding an L2ARC unless:

- Your cache hit ratios as shown in your storage reports show that you have a significant proportion of I/Os which are not satisfied from cache.

Unless you have a large amount of storage, a significant proportion of which is frequently and randomly read, you will be surprised just much is satisfied from the cache and how little from Hard Disk - it doesn't take a lot of activity to populate the cache with the metadata, and sequential reads of large files (e.g. for streaming audio or video) will be pre-loaded and satisfied from cache. - Consider first adding normal memory as a means of improving your cache hit ratio.

- You have a noticeable read performance issue, traceable directly to having to wait for data from HDD, and not due to other causes such as network bandwidth.

- You have at least 64GB of memory - you need to store the metadata of the L2ARC in main memory, and performance will likely go down if you have < 64GB because of this.

- You cannot simply put the data itself onto SDD (due to size constraints)

- L2ARCs are not essential to the integrity of a pool - so they do not need to be resilient i.e. mirrored and your pool will continue operating (with reduced performance) if the L2ARC vDev fails, and if you add one it can be easily removed at a later date if you find it is not needed.

- L2ARCs can be configured to store e.g. only metadata blocks and not data blocks - and so a relatively small L2ARC (with a small impact on ARC size) can still provide some of the performance benefits of a Metadata special vDev without the same resiliency requirements.

¶ SLOG

As you might expect if you understand the full name, a Synchronous Log (SLOG) can provide performance benefits for response time critical or I/O intensive synchronous writes.

- Synchronous writes are where the client that is writing data needs to be notified when the data is physically committed to persistent storage i.e. it will not be lost in the event of a crash or power outage. Examples where this is absolutely necessary are databases e.g. so that financial transactions or online orders are never lost once they are confirmed.

- However there are many situations where writes are sent as a synchronous write but this commitment is not necessary. In these situations it is possible to configure e.g. the Zvol or Dataset as being asynchronous and / or the NFS share as asynchronous and / or the client mount of that share as asynchronous.

- SMB access from Windows is also always asynchronous.

In an asynchronous write, ZFS holds the data in memory until it can write it to disk (usually holding it in memory no more than a couple of seconds), and it sends a success response to the client immediately.

In a synchronous write, ZFS writes the data to disk (in a special area called a Zero-Intent-Log or ZIL), and only when this is complete does it send a success response to the client, and then when convenient it then transfers the data from the ZIL to the correct blocks on disk. So for synchronous writes, two separate writes are actually performed, first to ZIL, then to the data blocks, and this can be a limiting factor to the number of I/O writes that can be processed in intensive write environments.

Normally the ZIL is on the same disks as the data. With an SLOG, the ZIL is on a separate SLOG device, normally substantially faster than an HDD. This provides two benefits:

- The time required to write to the ZIL will be substantially less than to HDD, and thus the success response is sent to the client far quicker.

- One of the two I/Os for each write is to the faster SLOG device, and only half the number of writes are made to the data devices - so the peak capacity of client writes is going to be higher.

¶ Checklist

An SLOG will not benefit:

- SMB writes from Windows machines

- Any clients which can be explicitly marked as asynchronous

- App writes to datasets marked as asynchronous

- VM / iSCSI writes to Zvols marked as asynchronous

- Low volume, response time non-critical writes from any other client

An SLOG will potentially benefit:

- Medium- to high-volume writes (e.g. bulk file copies/moves) using e.g. NFS or SMB from Macs

- App writes to datasets which are not marked as Asynchronous

- VM / iSCSI writes to Zvols which are not marked as Asynchronous

- Relatively low-volume synchronous writes which are not response-time critical

In practice, there is no point in adding a SLOG unless:

- You have a noticeable write performance issue, traceable directly to having to wait for data to be written to HDD, and not due to other causes such as network bandwidth.

- You are definitely doing synchronous writes and do not want to turn these off for data integrity reasons

- You cannot simply put the data itself onto SDD (NVMe or SATA) due to large data size

- If you are going to add an SLOG disk, then it must be SSD (SATA or NVMe).

- If it is important to ensure synchronicity, then although an SLOG will only hold the last few seconds of writes, it is nonetheless essential to the integrity of a pool. Consequently an SLOG should be mirrored.

Additionally:

- Because SLOGs only hold ZIL data temporarily, they can be relatively small.

- If you add an SLOG, it can be easily removed at a later date if you find it is not needed.

¶ Special (Metadata) vDev

ZFS normally stores the metadata for your storage area - which describes which files are where - as part of the storage area itself. However you can use a Special vDev to store this metadata for HDD storage areas separately on a faster SSD (NVMe or possibly SATA) storage medium, and this will speed up how quickly TrueNAS can locate where on the HDD your data is stored.

This is very different to a metadata-only L2ARC which holds a copy of the metadata. A Special (Metadata) vDev holds the primary and ONLY copy of the pool's metadata, and if this vDev is lost (e.g. because it is non-resilient and the only device it is held on fails) then the entirety of the pool's data will be irrevocably lost too.

For this reason it is essential that a Special (Metadata) vDev if 2x (or even 3x) mirrored.

¶ Checklist

In practice, there is no point in adding metadata SSDs unless:

- You have a noticeable storage performance issue, traceable directly to having to wait for metadata to be read from HDD, and not due to other causes such as network bandwidth.

- You cannot resolve this performance problem using either more main memory for cache.

- You cannot simply put the data itself onto SDD (due to size constraints)

- You understand the risks of full data loss if the metadata is lost.

- Your hardware can support the resources needed for them i.e. dual NVMe slots.

Additionally:

- If you are going to add metadata disks, then they must be SSD (SATA or NVMe) and they must be mirrored.

- User @Constantin has undertaken some specific benchmarking of SvDevs, and suggests (in a somewhat technical article here) that they will be beneficial for rSyncs but also as an alternative to L2ARC or SSD help data for small files.

- Once a Special (Metadata) vDev is added to a pool it cannot be removed without moving all the data off, destroying and rebuilding the pool and moving the data back again.

¶ Deduplication

Deduplication is done by finding ZFS blocks which have the same exact contents, and then adjusting the metadata for the files that use these identical blocks to point to the same single block, allowing the other block(s) to be returned to the free space list.

However, this then means that a great deal more care has to be taken rewriting these files in order to ensure that a change to a block in one file does not end up inadvertently impacting the matching blocks in other files.

Additionally, once you have added deduplication special devices to a pool, they cannot later be removed from the pool.

Deduplication is a VERY intensive operation, requiring significant memory, fast SSDs and quite a lot of CPU. Consequently, ZFS Deduplication is a highly specialised solution to what is typically a large enterprise requirement.

Uncle Fester therefore recommends that you avoid deduplication like the plague - and he can remember full well just how bad the plague was when it killed half his family in the 17C (and he sneers at Covid as being a pale immitation!!).

¶ Summary

If you are thinking about adding any type of special vDev, then think about it again because in most typical home / small business environments they are not only unnecessary but possibly add to the risk and complexity of the solution and thus add to the complexity of recovering your pools when things go wrong.

But if you genuinely believe that they would be beneficial to your own use case, the before you go ahead please do seek advice in the TrueNAS forums on both 1) whether they are indeed necessary or a good idea; and 2) the best way to configure them.

¶ Links

| Prev - 2.2.4 Redundant disks | Index | Next - 2.3 Choosing your Server Hardware |